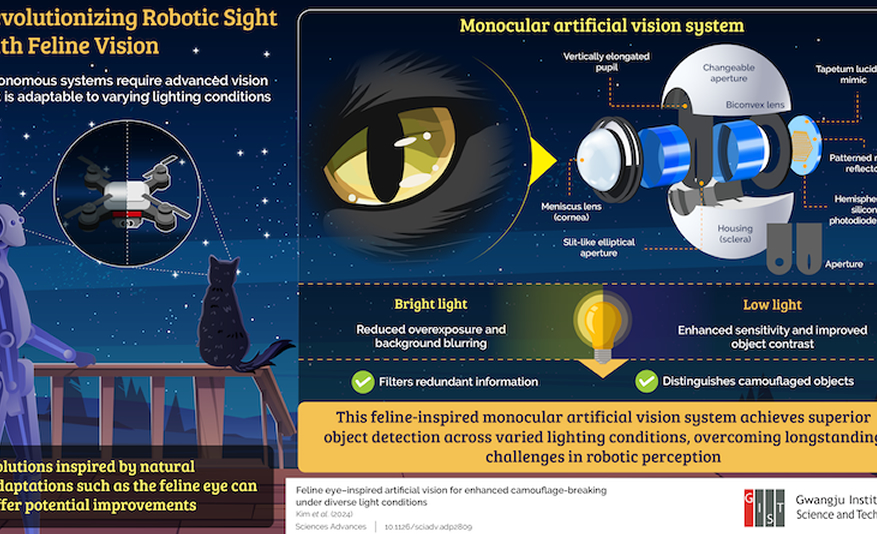

This week, Arteris announced a significant evolution of its network-on-chip (NoC) IP products, built around NoC tiling, which the company claims dramatically enhances the scalability, power efficiency, and performance of system-on-chip (SoC) architectures for artificial intelligence (AI) and machine learning (ML) workloads.


Arteris’ FlexNoC and Ncore IP products scale through cohesive tile integration, which supports both non-coherent (FlexNoC) and cache-coherent (Ncore) interconnects. These architectures keep data flowing efficiently between processing units such as CPUs, GPUs, and neural processing units (NPUs). The mesh topology is central to the design: each tile connects to the network through network interface units (NIUs), enabling low-latency communication across the SoC. Beyond handling data transport, the mesh simplifies layout and routing, making it easier for designers to expand the system as computational demands grow.
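To make the idea of tile-to-tile transport in a mesh concrete, the sketch below uses XY (dimension-ordered) routing, a common textbook scheme for 2D meshes in which a packet first travels along the X axis and then along the Y axis. The article does not say which routing algorithm Arteris uses; this is a generic illustration, assuming tiles addressed by hypothetical (x, y) coordinates, and `xy_route` is a hypothetical helper.

```python
from typing import List, Tuple

Coord = Tuple[int, int]  # (x, y) position of a tile in a 2D mesh (hypothetical addressing)


def xy_route(src: Coord, dst: Coord) -> List[Coord]:
    """Return the tiles visited when routing src -> dst with XY
    dimension-ordered routing: move along X first, then along Y.
    Generic illustration only, not Arteris' routing algorithm."""
    x, y = src
    path = [(x, y)]
    # Move horizontally until the column matches the destination.
    while x != dst[0]:
        x += 1 if dst[0] > x else -1
        path.append((x, y))
    # Then move vertically until the row matches the destination.
    while y != dst[1]:
        y += 1 if dst[1] > y else -1
        path.append((x, y))
    return path


if __name__ == "__main__":
    route = xy_route((0, 0), (2, 1))
    print(route)                    # [(0, 0), (1, 0), (2, 0), (2, 1)]
    print(len(route) - 1, "hops")   # 3 hops
```

Because every packet takes a fixed X-then-Y path, the hop count equals the Manhattan distance between tiles, and the scheme avoids routing deadlock in a plain mesh, which is one reason it is a popular default.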

For example, AI applications such as deep learning and natural language processing (NLP) rely on parallel processing, and NoC tiling allows system architects to add processing elements (PEs) without introducing bottlenecks. Each tile is functionally identical, which avoids much of the complexity that typically arises in large-scale SoC designs. As a result, a design can be scaled by replicating tiles to handle higher data volumes and larger models without significantly increasing design time.
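A rough way to see why replicating identical tiles scales well is to count how mesh resources grow with the tile count. The sketch below computes first-order metrics for a generic rows × cols 2D mesh, assuming one router per tile and nearest-neighbor links; `MeshStats` and `mesh_stats` are hypothetical names, and the model ignores Arteris-specific details the article does not describe.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MeshStats:
    tiles: int     # number of identical tiles (one PE plus one router each)
    links: int     # router-to-router links, each bidirectional link counted once
    diameter: int  # worst-case hop count between any two tiles


def mesh_stats(rows: int, cols: int) -> MeshStats:
    """First-order metrics for a generic rows x cols 2D mesh of replicated tiles."""
    # Horizontal links per row times rows, plus vertical links per column times columns.
    links = rows * (cols - 1) + cols * (rows - 1)
    # Longest XY path runs corner to corner.
    diameter = (rows - 1) + (cols - 1)
    return MeshStats(rows * cols, links, diameter)


if __name__ == "__main__":
    for n in (2, 4, 8):
        print(n, mesh_stats(n, n))
    # 2 MeshStats(tiles=4, links=4, diameter=2)
    # 4 MeshStats(tiles=16, links=24, diameter=6)
    # 8 MeshStats(tiles=64, links=112, diameter=14)
```

The link count grows roughly in proportion to the number of tiles (close to two links per tile), so aggregate network capacity grows as tiles are added, while the worst-case distance grows only with the square root of the tile count. The tile design itself does not change as the mesh grows.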

Power Efficiency and Dynamic Frequency Scaling

As AI workloads become more demanding, optimizing power consumption while maintaining performance is necessary for any computing platform. Arteris' NoC tiling technology includes several features that contribute to significant power savings.

One such mechanism is dynamic frequency scaling (DFS), which allows individual tiles in the network to run at different clock frequencies according to their workload. Tiles that are not needed for processing at a given moment can also be powered down entirely.
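The article does not describe how frequencies are chosen, so the following is only a minimal sketch of a per-tile DFS policy under stated assumptions: a hypothetical set of discrete frequency levels, a hypothetical idle threshold below which a tile is powered down, and utilization measured relative to the top frequency. The names `FREQ_LEVELS_MHZ`, `IDLE_THRESHOLD`, `select_frequency`, and `plan_tiles` are all hypothetical.

```python
from typing import Dict, Sequence

# Hypothetical operating points in MHz; a real design defines its own.
FREQ_LEVELS_MHZ: Sequence[int] = (250, 500, 750, 1000)
# Hypothetical threshold: below this utilization a tile is treated as idle.
IDLE_THRESHOLD = 0.05


def select_frequency(utilization: float) -> int:
    """Pick the lowest frequency level that covers the tile's demand.

    utilization is relative to the highest level (0.0 .. 1.0).
    Returns 0 to signal that the tile should be powered down."""
    if utilization < IDLE_THRESHOLD:
        return 0  # idle tile: power it down rather than just slowing it
    demand_mhz = utilization * FREQ_LEVELS_MHZ[-1]
    for level in FREQ_LEVELS_MHZ:
        if level >= demand_mhz:
            return level
    return FREQ_LEVELS_MHZ[-1]  # demand above the top level: run flat out


def plan_tiles(loads: Dict[str, float]) -> Dict[str, int]:
    """Map each tile name to its selected frequency (0 = powered down)."""
    return {tile: select_frequency(u) for tile, u in loads.items()}


if __name__ == "__main__":
    print(plan_tiles({"preprocess": 0.2, "inference": 0.9, "spare": 0.0}))
    # {'preprocess': 250, 'inference': 1000, 'spare': 0}
```

Choosing the lowest level that still covers demand is the simplest reasonable policy; real power managers usually add hysteresis so tiles do not oscillate between levels.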

The tiling architecture also supports partitioning tiles into separate clock and voltage domains, which lets designers isolate specific sections of the SoC for independent power management. The mesh topology facilitates this by providing flexible connections between tiles that can be activated or deactivated dynamically as workload requirements change.
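Grouping tiles into shared clock and voltage domains has a practical consequence: a domain must run fast enough for its busiest tile, and it can be switched off only when every tile in it is idle. The sketch below illustrates that rule under assumed parameters; the two voltage/frequency states ("low" and "high"), the 0.5 utilization cutoff, the idle threshold, and the function `plan_domains` are hypothetical and not taken from Arteris.

```python
from typing import Dict, List

IDLE_THRESHOLD = 0.05  # hypothetical: below this a tile counts as idle
HIGH_THRESHOLD = 0.5   # hypothetical: at or above this the domain needs full speed


def plan_domains(domains: Dict[str, List[str]],
                 loads: Dict[str, float]) -> Dict[str, str]:
    """Choose a state for each clock/voltage domain.

    All tiles in a domain share one clock and one supply, so the domain
    state is driven by its busiest tile. Tiles missing from `loads`
    are treated as idle."""
    plan: Dict[str, str] = {}
    for name, tiles in domains.items():
        peak = max((loads.get(t, 0.0) for t in tiles), default=0.0)
        if peak < IDLE_THRESHOLD:
            plan[name] = "off"   # every tile idle: gate the whole domain
        elif peak < HIGH_THRESHOLD:
            plan[name] = "low"   # reduced voltage and frequency
        else:
            plan[name] = "high"  # nominal voltage and frequency
    return plan


if __name__ == "__main__":
    domains = {"pre": ["t0", "t1"], "npu": ["t2", "t3"], "spare": ["t4"]}
    loads = {"t0": 0.2, "t1": 0.1, "t2": 0.95, "t3": 0.4}
    print(plan_domains(domains, loads))
    # {'pre': 'low', 'npu': 'high', 'spare': 'off'}
```

The trade-off this exposes is domain granularity: finer domains let more of the chip drop to low power, at the cost of more clock and voltage crossings in the network.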

For instance, in a neural-network-based AI system, some tiles may handle less intensive tasks such as preprocessing while others perform compute-heavy tasks such as model inference. By scaling each tile's power consumption to its real-time computational load, Arteris’ tiling technology is designed to keep the SoC power-efficient across a range of applications.
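The article does not quantify the savings, but the standard first-order model of CMOS dynamic power shows why adjusting frequency and voltage per tile pays off. Here $\alpha$ is the switching activity factor, $C$ the switched capacitance, $V_{dd}$ the supply voltage, and $f$ the clock frequency. The operating points in the second line are hypothetical, chosen only to illustrate the arithmetic for a lightly loaded tile moved from 1.0 GHz at 0.9 V to 0.5 GHz at 0.7 V:

$$
P_{\text{dyn}} = \alpha \, C \, V_{dd}^{2} \, f
$$

$$
\frac{P_{\text{low}}}{P_{\text{high}}} = \left(\frac{0.7\ \text{V}}{0.9\ \text{V}}\right)^{2} \times \frac{0.5\ \text{GHz}}{1.0\ \text{GHz}} \approx 0.605 \times 0.5 \approx 0.30
$$

Frequency scaling alone reduces dynamic power linearly, while lowering the voltage as well gives an additional quadratic reduction, so in this example the tile's dynamic power falls to roughly 30% of its original value. Powering a tile down also removes its leakage power, which this formula does not model.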

A Scalable Solution for AI Processing

AI compute applications have distinct requirements regarding scalability, latency, and power efficiency. Arteris’ NoC tiling innovation is well-positioned to meet these needs due to its modular architecture and enhanced data management capabilities.