In a strategic move that feels like it’s straight from an Aaron Sorkin movie, OpenAI has started crafting its own AI chip, a custom design meant to handle the heavy demands of running its advanced models. The company behind ChatGPT has partnered with Broadcom and Taiwan Semiconductor Manufacturing Company (TSMC) to roll out its first in-house chip by 2026, Reuters reports. Rather than building its own factories to bring chip manufacturing fully in-house, OpenAI has shelved that multi-billion-dollar idea. It is instead leaning on established industry muscle in a way that’s both practical and quietly rebellious.

Why look beyond the usual suppliers? OpenAI is already a massive buyer of Nvidia’s GPUs, which it relies on both for training its models and for inference, the step in which a trained model turns a prompt into a response. But here’s the twist: Nvidia’s chips are expensive, and OpenAI wants to diversify. It is also adding AMD’s MI300X chips to the mix, a sign of its resourcefulness in a GPU market often plagued by shortages. Bringing AMD into the lineup might look like mere supply-chain insurance, but it’s more than that: it shows OpenAI’s reluctance to put all its eggs in one pricey basket. It’s a bit like Apple building its own Apple Intelligence while still leaning on ChatGPT when necessary.

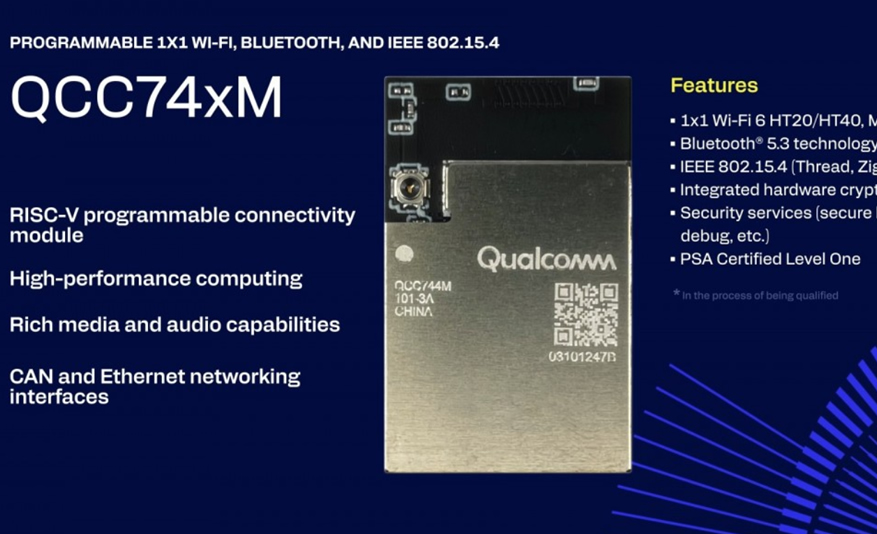

Broadcom is helping OpenAI design the chip, including the ability to move data on and off it quickly, which is critical in OpenAI’s data centers, where endless rows of chips must work in sync. Securing TSMC, the world’s largest contract chipmaker, to manufacture the chips shows OpenAI’s knack for creative problem-solving. TSMC’s powerhouse reputation and production capacity give OpenAI’s experimental chip a significant manufacturing edge, which is key to scaling its infrastructure to meet ever-growing AI workloads.

OpenAI’s venture into custom chips isn’t just about technical specs or saving money; it’s a tactical play to gain more control over its own technology (something we’ve seen Apple do before). By tailoring the chip specifically for inference, the part of AI that applies what a model has learned to produce answers, OpenAI is aiming for the real-time processing speed that tools like ChatGPT depend on. This push for optimization goes beyond efficiency: it positions OpenAI as an innovator following a path that Google and Meta have already taken with their own custom AI chips.

The strategy is fascinating because it doesn’t pit OpenAI against its big suppliers. Even as it pursues its custom chip, OpenAI is staying close to Nvidia, preserving access to Nvidia’s newest and most advanced Blackwell GPUs and avoiding potential friction. It’s like staying friendly with the popular kid while building your own brand. This partnership-heavy approach secures top-tier hardware without burning any bridges, a balancing act OpenAI is managing with surprising finesse.