Road crash fatalities are a distressing and unacceptable reality of modern society. Notably, the U.S. has higher road fatality rates than many other Western countries. Sadly, many of these crashes are avoidable, with the Financial Times recently pointing to several potential contributing factors that appear more prevalent among U.S. drivers than among drivers in other nations: distracted driving through phone use, not wearing seat belts, breaking the speed limit and driving under the influence.

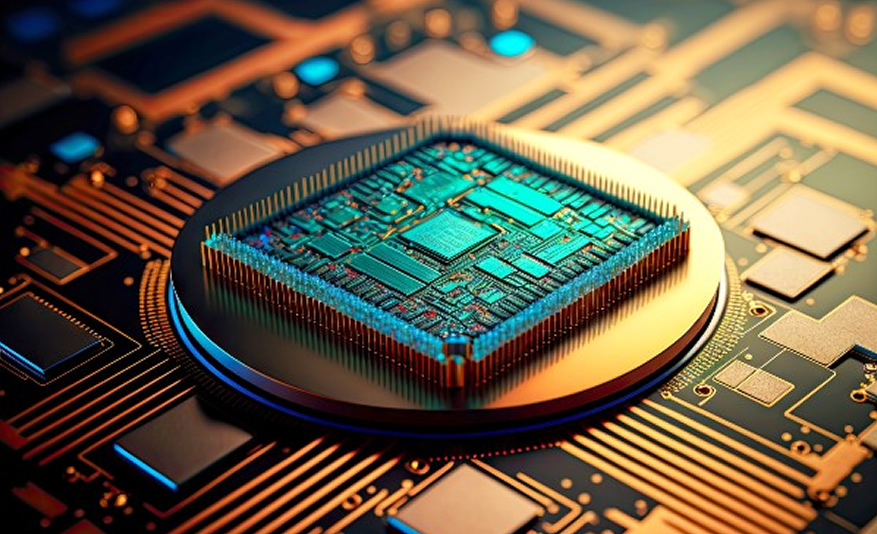

If the vision of the uncrashable car is to be met, a concerted effort by government regulators and the automotive industry is needed to drive the wider adoption and deployment of advanced driver-assistance systems (ADAS). ADAS technology, which includes capabilities such as automatic emergency braking (AEB), lane-departure warning (LDW), forward-collision warning (FCW), blind-spot detection (BSD) and driver and occupant monitoring systems (DMS, OMS), uses multiple sensors enabled by semiconductors to capture and transport critical data. That data is processed and used to complement human perception and, if needed, to directly actuate braking and steering systems to preserve occupant and road user safety.

As the number of sensors and sensor modalities increases, automotive electrical and electronic (E/E) system architectures are being challenged to process more data in real time, operate power-efficiently and scale across the multiple vehicle platforms of OEMs.

The complexities of sensor modalities

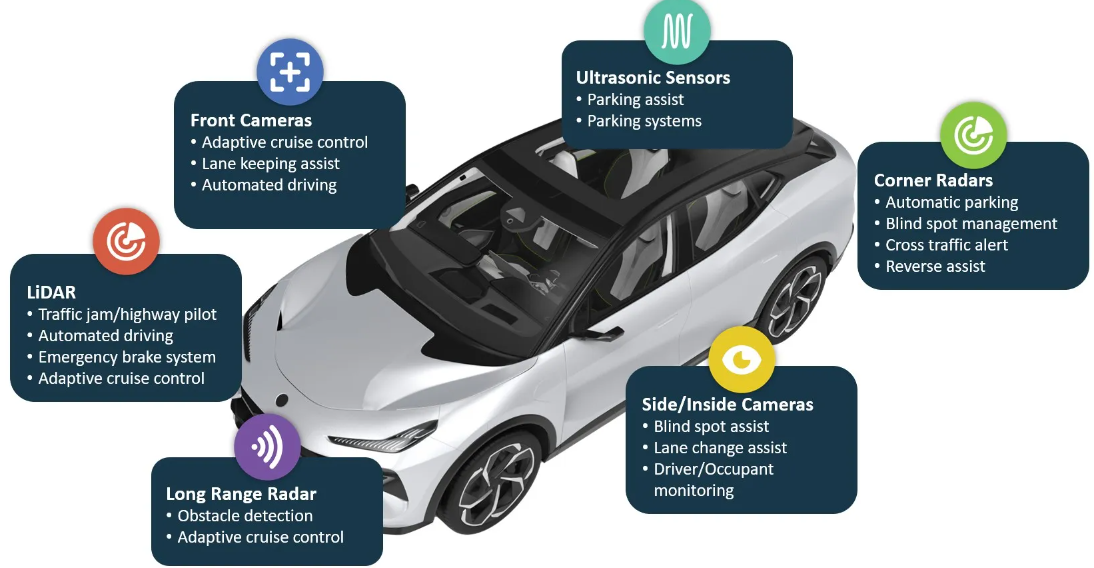

Common ADAS sensor types include cameras (computer vision), ultrasonic, radar and LiDAR, each of which has its own benefits and shortfalls. Radar, for example, provides excellent sensing of objects crossing or coming into a vehicle’s path across a range of weather conditions, but it has limited depth precision and object recognition capabilities. Conversely, cameras are extremely good at object recognition but can perform poorly in adverse weather or lighting conditions. Ultrasonic sensors have good all-weather performance but are suited only to low-spatial-resolution, short-range applications such as automated parking. Finally, LiDAR excels in range, accuracy and depth precision and is unaffected by low light, but it may be impeded by heavy rain or fog.

To support ADAS applications such as AEB, LDW, BSD and DMS across the widest range of environmental conditions, and to enable a degree of redundancy, OEMs deploy multiple sensors and sensor modalities. This proliferation of ADAS sensors and the accompanying in-vehicle processing is transforming automotive E/E architectures. Additionally, perception systems, which interpret a vehicle’s surroundings using the sensor data, are rapidly evolving as the sensors and the compute capability of automotive systems-on-chip (SoCs) improve.
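The case for mixing modalities can be sketched as a simple coverage check. The snippet below is illustrative only: the `DEGRADED_BY` table and the `usable_modalities` helper are hypothetical names, the condition labels are coarse simplifications of the trade-offs described above, and range limits (such as ultrasonic sensors being short-range only) are not modeled.

```python
# Conditions under which each modality's performance is degraded, taken
# loosely from the trade-offs described in the text (a simplification).
DEGRADED_BY = {
    "camera": {"heavy_rain_or_fog", "low_light"},
    "radar": set(),
    "ultrasonic": set(),  # all-weather, but short-range only (not modeled)
    "lidar": {"heavy_rain_or_fog"},
}


def usable_modalities(fitted, conditions):
    """Return the fitted modalities not degraded by any current condition."""
    return sorted(m for m in fitted if not (DEGRADED_BY[m] & set(conditions)))


# A camera-plus-LiDAR sensor set has no undegraded modality in heavy rain
# or fog; adding radar restores coverage for that condition.
camera_lidar = usable_modalities({"camera", "lidar"}, {"heavy_rain_or_fog"})
with_radar = usable_modalities({"camera", "lidar", "radar"}, {"heavy_rain_or_fog"})
print(camera_lidar)
print(with_radar)
```

A real sensor-set decision would also weigh range, resolution, cost and field of view, none of which this sketch captures.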

Sensor fusion is the process whereby data from multiple sensors (of the same modality or of different modalities) is merged, or “fused,” to obtain a more comprehensive environmental model for the perception system. Sensor data can be fused before or after scene segmentation, classification or object detection by perception algorithms.

The point in the perception process at which fusion is performed gives rise to the concepts of “early fusion” (raw sensor data is fused) and “late fusion” (data is fused after perception processing of each individual sensor’s output). Late fusion has the benefits of lower perception system computational requirements, resilience to individual sensor failure and sensor optionality for the OEM. Early fusion provides richer input data to the neural-network-based perception algorithms but comes at the cost of higher computational load and a lack of sensor optionality or failure resilience.
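To make the late fusion idea concrete, here is a minimal sketch in Python. It assumes each sensor's own perception stage has already produced a list of detected objects, and it merges those lists with a simple distance gate and a confidence-weighted average. The `Detection` type, the `late_fuse` function and the 2 m gate are all hypothetical choices for illustration; production systems typically use tracking filters and far more careful association.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Detection:
    """One object reported by a single sensor's perception stage (hypothetical)."""
    sensor: str
    x: float           # longitudinal position in metres, vehicle frame
    y: float           # lateral position in metres, vehicle frame
    confidence: float  # 0.0 to 1.0


def late_fuse(per_sensor, gate_m=2.0):
    """Merge per-sensor object lists into a single list of fused objects.

    A detection within gate_m of an existing fused object is merged into it
    using a confidence-weighted average; otherwise it starts a new object.
    """
    fused = []
    for detections in per_sensor.values():
        for det in detections:
            if det.confidence <= 0:
                continue  # ignore detections that carry no weight
            match = next(
                (o for o in fused if hypot(o["x"] - det.x, o["y"] - det.y) <= gate_m),
                None,
            )
            if match is None:
                fused.append({"x": det.x, "y": det.y,
                              "weight": det.confidence, "sensors": {det.sensor}})
                continue
            total = match["weight"] + det.confidence
            match["x"] = (match["x"] * match["weight"] + det.x * det.confidence) / total
            match["y"] = (match["y"] * match["weight"] + det.y * det.confidence) / total
            match["weight"] = total
            match["sensors"].add(det.sensor)
    return fused


camera = [Detection("camera", 20.0, 0.0, 0.5)]
radar = [Detection("radar", 21.0, 0.0, 0.5)]

both = late_fuse({"camera": camera, "radar": radar})
# If the camera reports nothing (for example, after a failure), fusion
# still yields the radar's object.
radar_only = late_fuse({"camera": [], "radar": radar})
```

Because each sensor contributes only an object list, a sensor can be dropped or swapped without changing `late_fuse`, which mirrors the optionality and failure resilience attributed to late fusion. An early fusion pipeline would instead feed combined raw data into a single perception model, so it has no equivalent per-sensor fallback.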

The choice of where perception and sensor fusion processing occurs is a function of the vehicle’s E/E architecture.

Sensing system architectures

As cars continue to require increased functionality and processing performance, the number of semiconductor-enabled electronic control units (ECUs) within a vehicle has dramatically risen, from tens to over 100 today. The E/E architecture is foundational to supporting the functionality and user experience of modern vehicles. This architecture features multiple ECUs, communication networks and protocols, and multi-layered software that enable vehicles to perform multiple functions efficiently and safely, including ADAS.

For automakers, this functionality explosion has created challenges in terms of E/E architecture design complexity, cost, cabling weight, power distribution, thermal constraints, vehicle software management and supply-chain logistics. The industry is moving away from legacy single-function ECU architectures and toward higher levels of ECU consolidation and greater processing centralization through combinations of domain (function-specific), zone (physically co-located functions) and central vehicle compute (a central “brain”) E/E architectures.

In a central compute E/E architecture, a single ECU handles ADAS, infotainment and body function processing. Today that ECU integrates multiple SoCs, although these may in the future be consolidated into a single SoC. Raw data from the various ADAS sensors is transported to it for early fusion perception processing, which informs decision-making and any necessary vehicle-motion-related interventions.

While centralized processing can offer theoretical benefits in vehicle software management and updateability, it also presents cost, integration, wiring, thermal and power challenges for OEMs that have a range of vehicle classes in their portfolio. Additionally, the early fusion approach that centralized processing implies locks OEM perception algorithms into a specific sensor set and higher processing needs. As a result, this E/E architecture may be better suited to OEMs that need to address only a small number of premium-only or electric-only platforms and don’t have a broad or legacy vehicle portfolio to support.

A more scalable alternative to the central compute E/E architecture leverages distributed intelligence within the context of zone-based control. Here, ADAS perception processing is performed at or near the sensor edge, in a zone controller, in a downstream domain controller, or in some combination of these, which enables greater compute flexibility and architecture scalability. The perception processing performed at or near the edge can range from full object classification and detection to simpler data pre-processing. This distributed intelligence approach helps to minimize the volume of multi-gigabit raw sensor data that must be transported across the vehicle, thereby reducing associated wiring cost and weight and easing downstream computing, power and thermal challenges. Crucially, it also gives OEMs the option to substitute sensors in order to scale ADAS across their vehicle classes.
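The data-volume argument can be illustrated with back-of-the-envelope arithmetic. The figures below (an 8-megapixel camera at 12 bits per pixel and 30 frames per second, and an object list of 64 entries of 32 bytes each) are assumed example values, not measurements or product specifications, and the function names are hypothetical.

```python
def raw_camera_bps(megapixels, bits_per_pixel, frames_per_second):
    """Uncompressed video data rate in bits per second."""
    return megapixels * 1_000_000 * bits_per_pixel * frames_per_second


def object_list_bps(max_objects, bytes_per_object, updates_per_second):
    """Data rate of a fixed-size object list in bits per second."""
    return max_objects * bytes_per_object * 8 * updates_per_second


# Assumed example figures for illustration only.
raw = raw_camera_bps(megapixels=8, bits_per_pixel=12, frames_per_second=30)
objects = object_list_bps(max_objects=64, bytes_per_object=32, updates_per_second=30)

print(f"raw camera stream: {raw / 1e9:.2f} Gbit/s")
print(f"object list:       {objects / 1e6:.2f} Mbit/s")
print(f"reduction factor:  {raw / objects:.0f}x")
```

Under these assumptions, a single uncompressed camera stream falls in the multi-gigabit range while the object list stays well under one megabit per second. Real figures depend on the sensor, any compression applied and the message format used.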

The future of sensor fusion

The journey from today’s vehicles, with modest ADAS features and limited autonomy, to the longer-term goal of the uncrashable car must overcome considerable technical and commercial challenges. Distributed intelligence architectures that support ADAS sensing and sensor fusion offer OEMs a scalable approach to delivering this safer future.