A novel camera loaded with cutting-edge AI algorithms can detect cars and pedestrians around an autonomous vehicle 100× faster than current technology and with far less computational power, according to researchers who developed it at the University of Zurich.

By pairing a 20-fps RGB camera with an event camera and developing neural networks to process the incoming image data, professor Davide Scaramuzza and researcher Daniel Gehrig created a hybrid system that is claimed to be several orders of magnitude more efficient than the cameras used in cars today.

“Hybrid systems like this could be crucial [for] autonomous driving, guaranteeing safety without leading to a substantial growth of data and computational power,” said Scaramuzza, head of the Robotics and Perception Group at Zurich. “Our approach paves the way for efficient and robust perception … by uncovering the potential of event cameras.”

Today’s driver-assistance systems use image-based color cameras that capture 30 to 40 fps, with AI-based computer vision algorithms to deliver safe driving. Optimizing the tradeoff between bandwidth and latency in these sensors isn’t easy: High frame rates are best for capturing scene dynamics with minimal latency but raise bandwidth requirements, while lower frame rates increase the risk that vital data will be missed.

As part of his research with Scaramuzza into low-latency automotive vision, Gehrig, now at the University of Pennsylvania’s General Robotics, Automation, Sensing and Perception (GRASP) Lab, checked out the latest automotive cameras. The highest-frame-rate camera he could find at the time captured 45 images per second but with a latency of 25 ms—a time gap during which a vehicle’s surroundings would not be imaged.

“If something happens during this time, the camera may see it too late,” said Gehrig. “The question is, how can we reduce this time? Well, we could increase the frame rate and get even more images per second, but that translates into more data to be processed in real time—and as we become more reactive to our environment, this [data burden] is becoming more unsustainable.”
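The tradeoff Gehrig describes can be put into rough numbers. The sketch below is illustrative arithmetic only: the 1280×720 resolution and 3 bytes per pixel are assumptions chosen for the example, not figures from the researchers, and real automotive cameras compress or subsample their output. It computes the time between consecutive frames and the uncompressed data rate for a few frame rates mentioned in this article.

```python
def frame_interval_ms(fps: float) -> float:
    """Time between the start of consecutive frames, in milliseconds."""
    return 1000.0 / fps


def raw_data_rate_mb_s(fps: float, width: int = 1280, height: int = 720,
                       bytes_per_pixel: int = 3) -> float:
    """Uncompressed data rate in megabytes per second.

    width, height and bytes_per_pixel are assumed values for illustration.
    """
    return fps * width * height * bytes_per_pixel / 1e6


# 20 fps: the RGB camera in the hybrid setup; 45 fps: the fastest automotive
# camera Gehrig found; 5,000 fps: the frame rate whose latency the hybrid
# system is reported to match.
for fps in (20, 45, 5000):
    print(f"{fps:>5} fps: {frame_interval_ms(fps):6.2f} ms between frames, "
          f"{raw_data_rate_mb_s(fps):9.1f} MB/s uncompressed")
```

Under these assumptions, shortening the gap between frames raises the data rate in direct proportion, which is the burden the hybrid design tries to avoid.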

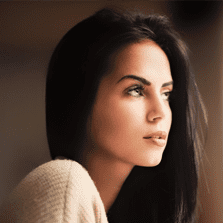

Instead, Gehrig and Scaramuzza have combined a 20-fps RGB color camera with an event camera, which has pixels that record data only when motion is detected, rather than at regular frame rates. “[These cameras] have no blind spot between frames,” Scaramuzza said.
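To make the idea of pixels that report only changes more concrete, here is a minimal sketch of a common idealized event-sensor model, in which a pixel emits an event when its log brightness moves by more than a threshold since its last event. This is an assumption for illustration, not the researchers' pipeline: real event pixels fire asynchronously with fine-grained timestamps, whereas this toy version compares two snapshots, and the function names and the 0.2 threshold are hypothetical.

```python
import math


def log_image(frame, eps=1e-6):
    """Per-pixel log brightness; eps avoids log(0)."""
    return [[math.log(v + eps) for v in row] for row in frame]


def events_between(ref_log, frame, threshold=0.2, eps=1e-6):
    """Return (events, new_ref_log) for one brightness snapshot.

    An event is (x, y, polarity): +1 if the pixel got brighter, -1 if darker.
    Pixels whose log brightness changed by less than the threshold stay silent,
    and their reference value is left untouched.
    """
    events = []
    new_ref = [row[:] for row in ref_log]
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            log_now = math.log(value + eps)
            diff = log_now - ref_log[y][x]
            if abs(diff) >= threshold:
                events.append((x, y, 1 if diff > 0 else -1))
                new_ref[y][x] = log_now
    return events, new_ref


static_scene = [[0.5, 0.5, 0.5],
                [0.5, 0.5, 0.5]]
changed_scene = [[0.5, 0.9, 0.5],   # one pixel brightens
                 [0.5, 0.5, 0.2]]   # one pixel darkens

ref = log_image(static_scene)
no_events, ref = events_between(ref, static_scene)
some_events, ref = events_between(ref, changed_scene)
print(len(no_events), "events for an unchanged scene")
print(some_events)
```

In this toy model an unchanged scene produces no output at all, which is why such a sensor can keep its data rate low while still reacting quickly to change.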

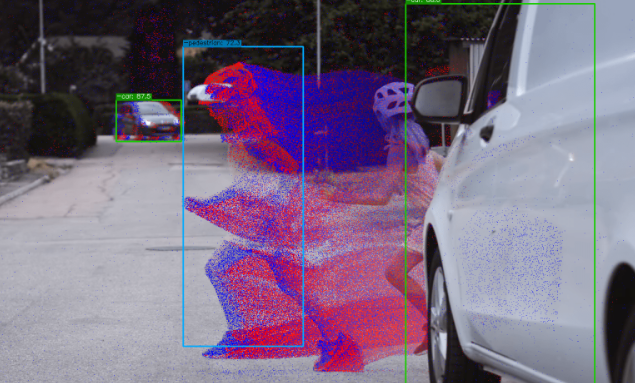

As part of the setup, each camera has been coupled with a neural network algorithm to analyze the captured imagery. Images from the standard camera are processed by a convolutional neural network (CNN) that is trained to recognize cars or pedestrians. The event camera data, however, is processed using an asynchronous graph neural network (GNN), designed by Gehrig and Scaramuzza, that ignores static objects and only processes 3D data that change over time—that is, events.

Each time the standard camera captures an image, its CNN processes that image and shares the resulting features with the asynchronous GNN, which reuses them to boost its own performance. Event cameras can miss slow-moving or nearly static objects, so CNN feature sharing is helpful in slow-motion scenarios where only a few events have been triggered.

The lab trial results, reported in Nature, indicate that the setup can match the latency of a 5,000-fps camera but with the bandwidth of a 45-fps automotive camera. Those results come without compromising accuracy and offer clear benefits over the best cameras on the automotive market, the researchers reported.

“We have a low data rate with very low latency,” Gehrig said. “The real breakthrough has been how we can efficiently process the data from both [cameras] with our algorithms.”

Gehrig asserted that the algorithms he and Scaramuzza developed give their hybrid approach an efficiency edge over the event-based methods used in commercially available products. Key players with event cameras on the market include Prophesee and iniVation. “I think the challenge has been that event cameras are quite different from ‘normal’ cameras, and this requires a new approach when it comes to processing [the images],” Gehrig said.

But while Scaramuzza’s Robotics and Perception Group has made a name for itself by developing cutting-edge algorithms for cameras, market-ready systems tend to rely on more established, robust algorithms trusted by automakers. Gehrig wouldn’t predict when the hybrid solution might find its way into vehicles but said algorithm optimization would continue apace. “We want to further reduce the time it takes to process [data],” he said.

The left half of this scene is captured by the color camera, while the right half comes from the event camera. (Source: Robotics and Perception Group, University of Zurich)

The researchers also intend to integrate LiDAR sensors—as already used in self-driving cars—into their hybrid camera. According to Gehrig, the current camera has difficulty estimating the distance to an object and may not be able to determine, for example, whether a so-called bounding box—the rectangular label in object-detection tasks—is pointing out a small person nearby or a tall person in the distance.

“This ambiguity can be removed using LiDAR … and is something that I think is crucial to further enhance the usability of our algorithm,” Gehrig said.